pymupdf4llm-mcp: Enhancing LLM Agents with High-Quality PDF Context

Wh1isper·May 8, 2025

Introduction

In the rapidly evolving landscape of AI agents, providing high-quality context to Large Language Models (LLMs) is crucial for their effectiveness. One common challenge is extracting structured, usable content from PDF documents - a format that's ubiquitous in academic, business, and technical domains.

As the developer of lightblue-ai, I found myself needing a reliable, LLM-friendly PDF parsing tool that could preserve both textual content and the spatial relationships of elements like images. After evaluating various options, pymupdf4llm emerged as the standout solution, offering complete textual extraction and accurate image placement.

While I could have directly implemented this tool within my own agent framework, I realized the value of making pymupdf4llm accessible to other agent developers. This is where the Model Context Protocol (MCP) became invaluable - it provides a standardized way for closed agent systems to integrate external tools.

What is MCP?

Model Context Protocol (MCP) is an open standard that creates a unified approach for applications to supply context to large language models. You can think of MCP as the 'universal connector' of the AI world - similar to how USB-C provides a standard interface for connecting devices to various peripherals, MCP establishes a consistent framework for connecting AI models to diverse information sources and tools. This standardization allows developers to integrate external data, knowledge bases, and utilities with language models in a consistent, interoperable way regardless of the specific AI system being used.

By creating an MCP wrapper for pymupdf4llm, I could enable other developers to immediately leverage official best practices for PDF parsing without needing to spend time discovering optimal configurations and implementations themselves.

This led to the creation of pymupdf4llm-mcp, a Model Context Protocol (MCP) server that makes this powerful PDF parsing capability easily accessible to LLM agents.

What is pymupdf4llm-mcp?

pymupdf4llm-mcp is an MCP server that wraps the functionality of PyMuPDF4LLM, providing a standardized interface for converting PDF documents to markdown format. This conversion is specifically optimized for consumption by Large Language Models.

The tool leverages the robust PDF parsing capabilities of PyMuPDF and enhances it with features specifically designed for LLM consumption:

- Complete Textual Content Extraction: It preserves the semantic structure of documents, including headings, paragraphs, and lists.

- Accurate Image Placement: Images are extracted and referenced in the markdown output, maintaining their relationship to the surrounding text.

- Standardized Output: The consistent markdown format makes it easy for LLMs to process and understand the document structure.

- MCP Integration: As an MCP server, it can be easily integrated with various LLM agent frameworks that support the Model Context Protocol.

By converting PDFs to markdown, pymupdf4llm-mcp transforms opaque binary documents into a format that LLMs can effectively process, understand, and reason about.

Installation Guide

Getting started with pymupdf4llm-mcp is straightforward via uv, a fast Python package installer and resolver that makes dependency management seamless.

First, install uv if you don't have it already:

# Install uv using pip

pip install uv

# Or using the official installer script

curl -sSf https://astral.sh/uv/install.sh | bashThen configure your MCP client (such as Cursor, Windsurf, or a custom agent) to use pymupdf4llm-mcp, add the following configuration:

{

"mcpServers": {

"pymupdf4llm-mcp": {

"command": "uvx",

"args": [

"pymupdf4llm-mcp@latest",

"stdio"

],

"env": {}

}

}

}Usage Examples

Now I'll show you how to use pymupdf4llm-mcp in a simple way: Building an arxiv summary agent via pydantic-ai. This example demonstrates how to integrate pymupdf4llm-mcp into an LLM agent workflow to automatically download and summarize academic papers.

Python code:

from typing import Annotated

from pydantic import Field

from pydantic_ai import Agent

from pydantic_ai.mcp import MCPServerStdio

import httpx

server = MCPServerStdio(

"uvx",

args=[

"pymupdf4llm-mcp@latest",

"stdio",

],

)

agent = Agent(

"bedrock:us.anthropic.claude-3-7-sonnet-20250219-v1:0", mcp_servers=[server]

)

@agent.tool_plain

async def download_web(

url: Annotated[str, Field(description="URL to download")],

save_path: Annotated[

str, Field(description="Absolute path where the file should be saved")

],

) -> dict[str, str]:

"""

Download a file from a URL and save it to the specified path

"""

try:

response = httpx.get(url)

response.raise_for_status()

with open(save_path, "wb") as f:

f.write(response.content)

except Exception as e:

return {

"success": False,

"error": str(e),

"message": f"Failed to download {url}",

}

else:

return {

"success": True,

"message": f"Successfully downloaded {url} to {save_path}",

}

async def main():

async with agent.run_mcp_servers():

result = await agent.run(

"Please summarize the paper: https://arxiv.org/pdf/1706.03762"

)

print(result.output)

if __name__ == "__main__":

import asyncio

asyncio.run(main())Once the code is run it will summarize the PDF paper using Claude provided with the parsed output from PyMuPDF4LLM. This all happens as the agent is configured with Claude and has the pymupdf4llm-mcp server associated with it.

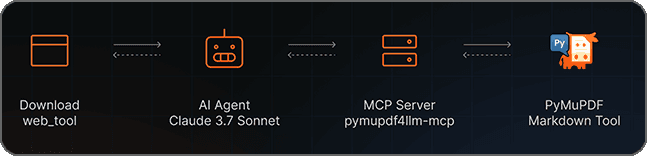

How the Example Works

- Setting up the MCP Server: We initialize an MCP server that runs pymupdf4llm-mcp using the

stdiotransport method. - Creating the Agent: We create a Claude 3.7 Sonnet agent and connect it to our MCP server.

- Implementing a Download Tool: We define a

download_webtool that fetches PDF files from URLs. - Behind the Scenes: When the agent runs, it:

- Downloads the PDF from arXiv using our custom tool

- Uses pymupdf4llm-mcp to convert the PDF to markdown format

- Processes the markdown content to understand the paper

- Generates a comprehensive summary

This workflow enables LLM agents to work with PDF content almost as effectively as they work with plain text, opening up vast repositories of knowledge that were previously difficult to access programmatically.

The Role of Context in LLM Agents

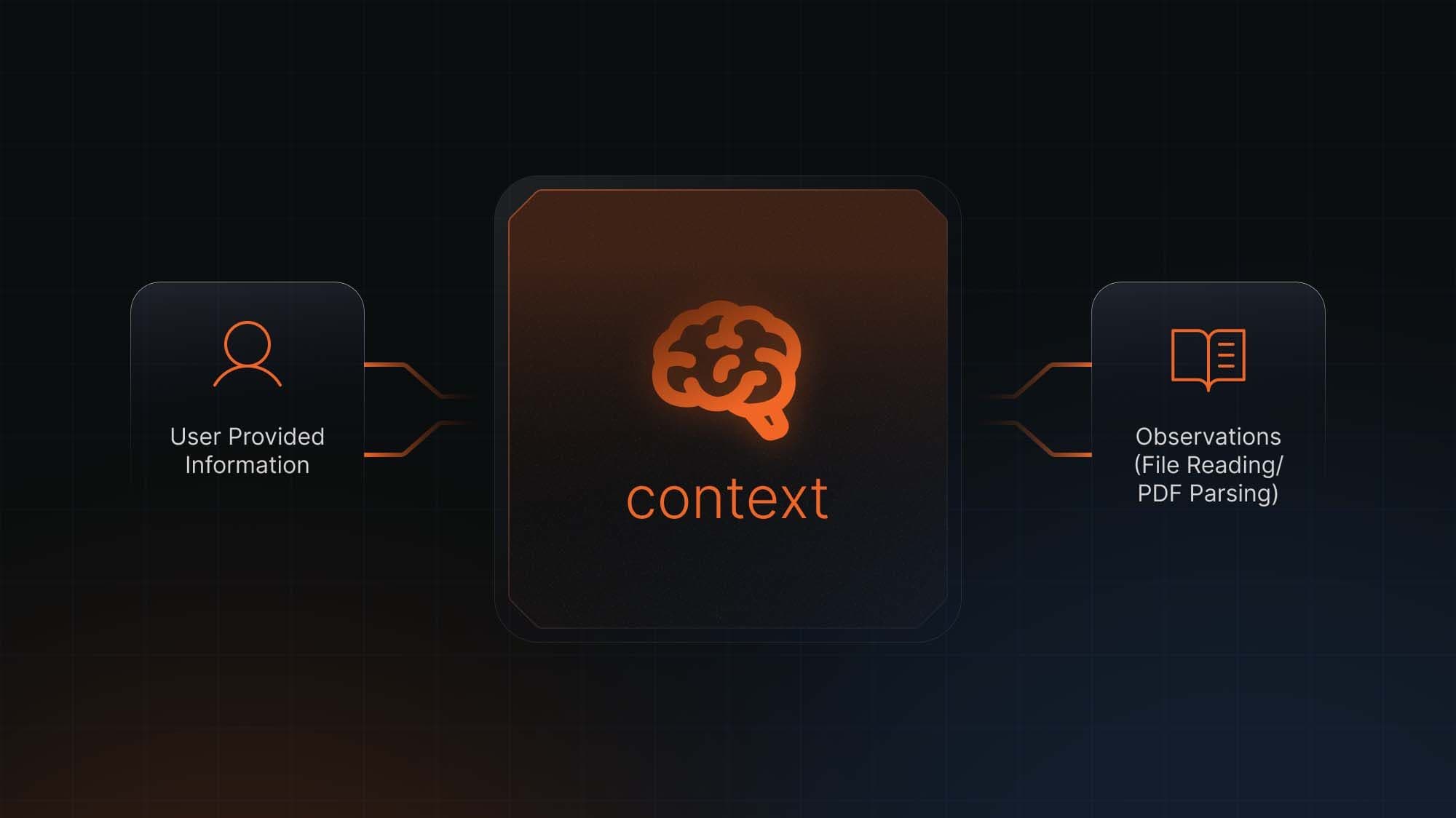

Current LLM agents have two key components: tools and context. While tools enable agents to interact with the world and perform actions, context is what informs their understanding and decision-making.

Context can come from two sources:

- User-provided information: Direct input from users that frames the task or provides background.

- Observations: Information gathered through tool use, such as web searches, file reading, or in this case, PDF parsing.

The quality of this context directly impacts the quality of the agent's outputs. When dealing with PDFs, poor parsing can lead to:

- Lost structural information (headings, sections, etc.)

- Missing or misplaced images

- Garbled text or formatting issues

- Loss of tables and other structured data

pymupdf4llm-mcp addresses these issues by providing high-quality, structured context from PDF documents. This enables LLMs to:

- Understand the document's organization and flow

- Reference specific sections or figures accurately

- Maintain the relationship between text and visual elements

- Process information in a way that preserves the author's intended meaning

As Claude 3.7 Sonnet and other advanced models demonstrate increasing proficiency at utilizing tools to gather context, the importance of high-quality context providers like pymupdf4llm-mcp becomes even more significant.

Conclusion

By bridging the gap between the ubiquitous PDF format and the markdown format that LLMs can effectively process, pymupdf4llm-mcp removes a significant barrier to incorporating valuable document-based knowledge into AI agent workflows.

I hope to see more tools in the community that focus on providing high-quality context to LLMs, as this is a crucial foundation for building truly capable and helpful AI systems.

Try pymupdf4llm-mcp

Clone from Github: github.com/pymupdf/pymupdf4llm-mcp

Install from PyPI: pypi.org/project/pymupdf4llm-mcp/